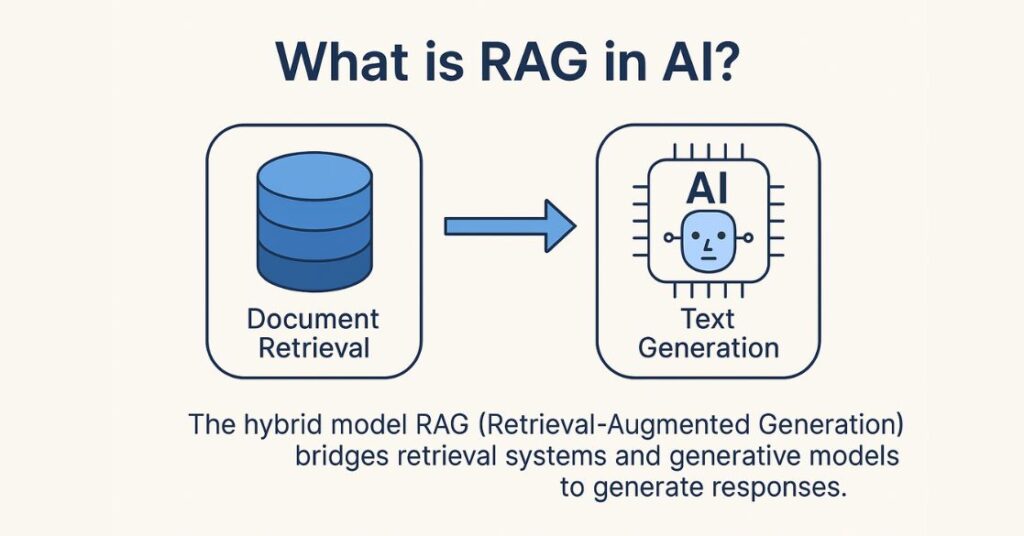

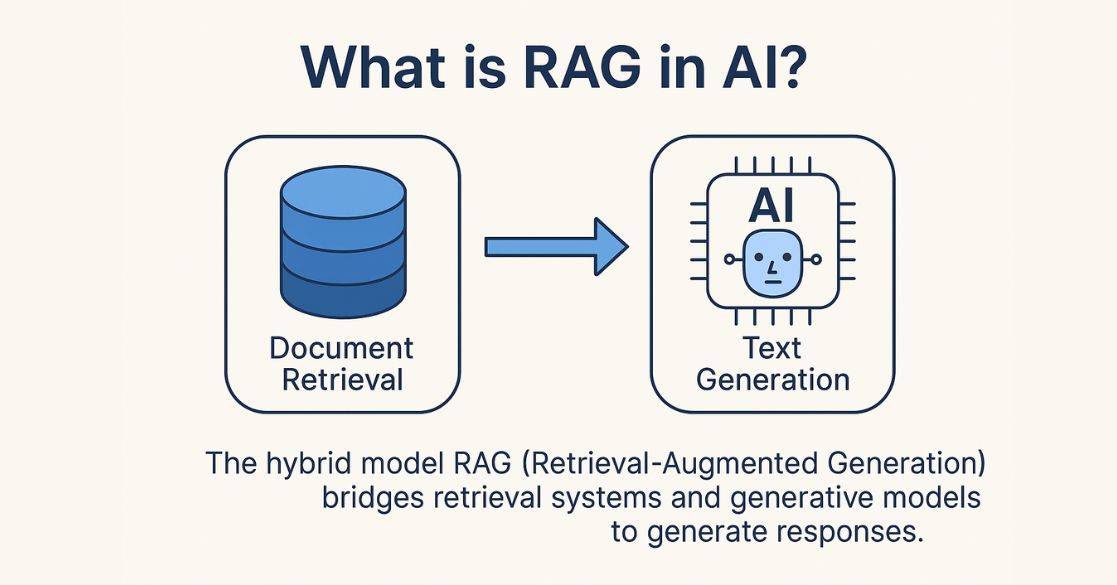

Retrieval-Augmented Generation (RAG) models represent a significant advancement in natural language processing by bridging the gap between pure generative models and retrieval-based systems. Unlike traditional language models that generate text solely based on patterns learned during training, RAG models actively seek out relevant external information to enhance the factual accuracy and contextual relevance of their outputs.

At the heart of RAG is a two-step process:

- Retrieval Phase: Given an input query or prompt, the model first queries an external knowledge source, such as a large corpus of documents, databases, or knowledge bases. This is done using a retriever component, which identifies the most relevant documents or passages related to the query. The retriever often uses embedding-based similarity search or traditional information retrieval techniques to find pertinent texts quickly and efficiently.

- Generation Phase: The retrieved texts are then passed along with the original query to a generative model—usually a transformer-based language model like GPT. This generator integrates the retrieved information into the context used for producing the final response. Essentially, the model “grounds” its generated answer on real, externally sourced data, reducing hallucinations and outdated knowledge.

Why RAG Models Are Important

- Enhanced Accuracy: Since RAG models pull fresh, relevant information from external sources, they can provide more precise and up-to-date answers than standard generative models limited by their training data cutoffs.

- Scalability and Flexibility: Instead of retraining a large model every time new information is added or changed, RAG leverages dynamic retrieval, meaning it can adapt to new knowledge by updating or expanding the document corpus alone.

- Reduced Hallucination: By grounding responses in retrieved documents, RAG reduces the risk of fabricating facts, a common challenge in purely generative models.

- Broad Applicability: These models shine especially in domains requiring current information, like customer service bots, personalized assistants, academic research tools, and enterprise knowledge management systems.

Technical Architecture Overview

- Retriever: This can be a dense retriever like a pretrained bi-encoder model that converts queries and documents into vectors for similarity matching, or a sparse retriever based on keyword matching or TF-IDF. The choice affects retrieval speed and accuracy.

- Generator: Typically a sequence-to-sequence transformer model (e.g., BART or T5), or an autoregressive model such as GPT, generates natural language output conditioned on both the input query and the retrieved context.

- End-to-End Training: Some RAG implementations train the retriever and generator jointly to optimize overall performance, while others keep them separate for modularity.

Example Use Case

Imagine a virtual assistant helping users with technical support. Instead of relying on a static knowledge base or embedded information, a RAG model fetches the latest troubleshooting guides or documentation relevant to the user’s query and incorporates that information to provide an accurate, step-by-step solution tailored to the problem.

In summary, RAG models synthesize the best of retrieval and generation by dynamically incorporating external knowledge into the response generation process, leading to smarter, more factual, and context-aware AI systems. This approach is revolutionizing how AI handles information-intensive tasks and is rapidly gaining adoption across industries that depend on reliable, real-time knowledge.